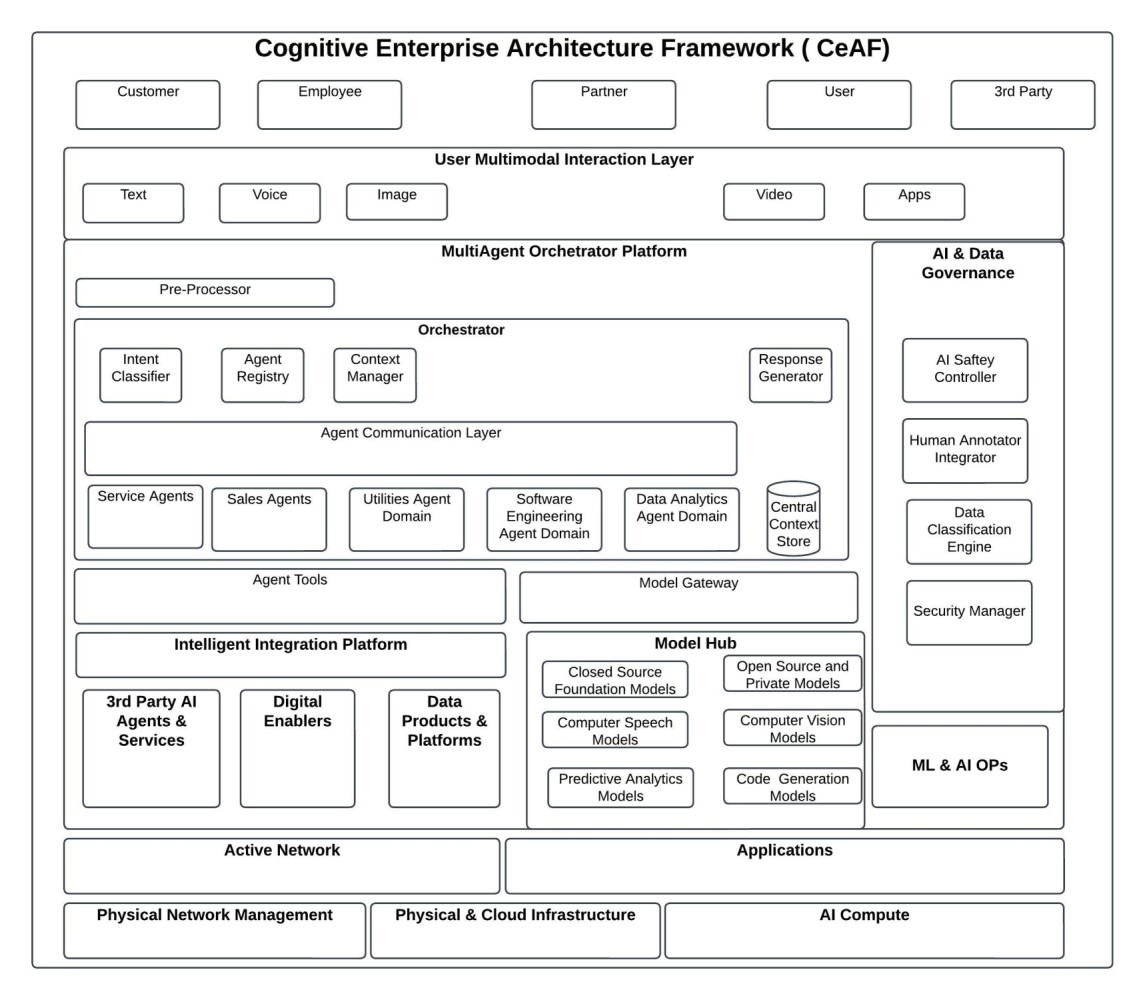

In my previous LinkedIn article, “The AI Adoption Imperative: Why Enterprises Need a Unified Framework,” I introduced the Cognitive Enterprise Architecture Framework (CeAF)—a structured approach to embedding intelligence across organizations. Today, let’s dive deeper into one of its foundational components: the Pre-Processor Layer, the intelligent gateway that makes AI-driven workflows possible by handling diverse customer inputs seamlessly.

The Pre-Processor Layer: Making AI Smarter, One Input at a Time

Think about how you interact with businesses today compared to just a few years ago. You might still want the same things—buying a new phone, upgrading your broadband tariff, or getting help when your internet slows down—but the way you do these tasks has changed dramatically. Now, instead of just calling customer service, you might snap a photo of your router’s blinking lights or send a voice note asking, “Why is my internet slow?”

This shift toward diverse, multimodal interactions is exactly why enterprises need something called the Pre-Processor Layer—a key component of the Multi-Agent Orchestrator Platform (MOP) within the Cognitive Enterprise Architecture Framework (CeAF).

Why Does the Pre-Processor Layer Matter?

Customers today communicate using text, images, voice notes, videos, and even sensor data from IoT devices. Without an intelligent way to handle these varied inputs, businesses risk inefficiencies and poor customer experiences. The Pre-Processor Layer solves this by:

- Normalizing Inputs: Converting raw data (like voice notes or screenshots) into structured formats such as JSON.

- Adding Context: Enriching inputs with metadata like timestamps and customer IDs to ensure accurate downstream processing.

- Multimodal Fusion: Combining insights from multiple input types (e.g., audio and images) to create a unified understanding.

- Real-Time Streaming: Managing interactions quickly and seamlessly to deliver immediate responses.
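To make the first two points concrete, here is a minimal Python sketch of normalizing a text input and wrapping it with context. The `NormalizedInput` envelope, its field names, and the `normalize_text` helper are assumptions made for illustration; CeAF does not prescribe a schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class NormalizedInput:
    """Hypothetical envelope; the field names are illustrative only."""
    modality: str      # e.g. "text", "audio", "image"
    content: str       # normalized payload (plain text in this sketch)
    customer_id: str   # context attached by the layer
    received_at: str   # ISO 8601 timestamp in UTC
    metadata: dict = field(default_factory=dict)


def normalize_text(raw: str, customer_id: str,
                   now: Optional[datetime] = None) -> NormalizedInput:
    """Collapse stray whitespace and attach contextual metadata."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    cleaned = " ".join(raw.split())
    return NormalizedInput(
        modality="text",
        content=cleaned,
        customer_id=customer_id,
        received_at=timestamp,
        metadata={"char_count": len(cleaned)},
    )


if __name__ == "__main__":
    record = normalize_text("  Why is my   internet slow? ", customer_id="C-1001")
    # Serialize to JSON for downstream agents.
    print(json.dumps(asdict(record), indent=2))
```

Audio or image inputs would likely reuse the same envelope, with `content` filled by a transcription or vision model instead of a whitespace cleanup.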

What Exactly Does the Pre-Processor Do?

Let’s break down some of its key functionalities in simple terms:

- Image Processing:

- Audio Processing:

- Video Processing:

- Sensor Data Processing:

- Multimodal Fusion:

A Real-Life Scenario: Broadband Customer Support

Imagine you’re frustrated with slow internet speeds. You send your broadband provider a quick voice note (“Why is my internet slow?”) along with a screenshot showing disappointing speed test results.

Here’s how the Pre-Processor Layer handles it:

- Voice-to-Text Conversion: Your voice note is transcribed into text instantly.

- Image Analysis: The screenshot is analyzed automatically to extract precise metrics like download/upload speeds and latency.

- Contextual Enrichment: Additional information—your customer ID, timestamp, previous service history—is added for context.

- Combining Insights: The system merges your transcribed question and extracted screenshot metrics into one structured format.

- Actionable Output: A neatly packaged JSON file is sent downstream to diagnostic agents who quickly identify your issue and propose solutions.
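The five steps above can be sketched in a few lines of Python. This is a rough illustration, not a reference implementation: `transcribe` and `ocr_screenshot` are stubs standing in for real speech and vision models, the canned strings they return are made up, and the JSON keys are assumptions. Service history lookup is left out for brevity.

```python
import json
import re
from datetime import datetime, timezone


def transcribe(audio_bytes: bytes) -> str:
    """Stub for a speech-to-text model; returns a canned transcript."""
    return "Why is my internet slow?"


def ocr_screenshot(image_bytes: bytes) -> str:
    """Stub for an OCR or vision model; returns canned speed-test text."""
    return "Download 12.4 Mbps  Upload 3.1 Mbps  Ping 48 ms"


def extract_metrics(ocr_text: str) -> dict:
    """Pull download speed, upload speed, and latency out of OCR text."""
    patterns = {
        "download_mbps": r"Download\s+([\d.]+)",
        "upload_mbps": r"Upload\s+([\d.]+)",
        "latency_ms": r"Ping\s+([\d.]+)",
    }
    metrics = {}
    for key, pattern in patterns.items():
        match = re.search(pattern, ocr_text, flags=re.IGNORECASE)
        if match:
            metrics[key] = float(match.group(1))
    return metrics


def preprocess(audio: bytes, image: bytes, customer_id: str) -> str:
    """Transcribe, analyze, enrich, and merge into one JSON document."""
    payload = {
        "customer_id": customer_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "query": transcribe(audio),
        "speed_test": extract_metrics(ocr_screenshot(image)),
    }
    return json.dumps(payload)


if __name__ == "__main__":
    print(preprocess(b"<voice note>", b"<screenshot>", customer_id="C-1001"))
```

A diagnostic agent receiving this payload gets the customer's question and the measured speeds side by side, without having to touch the raw audio or image.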

Best Practices for Implementing the Pre-Processor Layer

To ensure this layer works seamlessly, enterprises should:

- Support diverse input types (text, audio, images) for flexibility.

- Dynamically select optimal AI models based on specific tasks.

- Add contextual metadata to enhance accuracy.

- Ensure compliance with privacy standards like GDPR through anonymization.

- Optimize workflows for scalability and low-latency performance.
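Two of these practices, dynamic model selection and privacy protection, lend themselves to a short Python sketch. Everything named here is hypothetical: the `MODEL_ROUTES` table, the model names in it, and the helper functions are placeholders for illustration. Note also that salted hashing is pseudonymization rather than full anonymization, so on its own it would probably not be enough for a compliance review.

```python
import hashlib
import re

# Hypothetical routing table: (modality, task) -> model name (placeholders).
MODEL_ROUTES = {
    ("audio", "transcription"): "speech-to-text-small",
    ("image", "text_extraction"): "ocr-general",
    ("text", "intent"): "intent-classifier",
}

# Deliberately simple email pattern; real PII detection needs far more care.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def select_model(modality: str, task: str) -> str:
    """Pick a model for the given modality and task, or fail loudly."""
    try:
        return MODEL_ROUTES[(modality, task)]
    except KeyError:
        raise ValueError(f"no model registered for {modality}/{task}") from None


def pseudonymize_id(customer_id: str, salt: str) -> str:
    """Replace a customer ID with a truncated, salted SHA-256 digest."""
    digest = hashlib.sha256((salt + customer_id).encode("utf-8")).hexdigest()
    return digest[:16]


def redact_emails(text: str) -> str:
    """Mask email addresses before the text leaves the pre-processor."""
    return EMAIL_RE.sub("[EMAIL]", text)


if __name__ == "__main__":
    print(select_model("audio", "transcription"))
    print(pseudonymize_id("C-1001", salt="demo-salt"))
    print(redact_emails("Reach me at jane.doe@example.com please"))
```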

Key Architecture Principles

The Pre-Processor Layer should be built on principles such as:

- Modularity: Separate modules for each input type for easy scaling.

- Extensibility: Easy integration of new AI models without disrupting existing workflows.

- Error Resilience: Fallback mechanisms (like human annotations) for ambiguous inputs.

- Real-Time Processing: Prioritize low-latency operations for immediate responsiveness.
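One way to picture modularity, extensibility, and error resilience working together is a small processor registry, sketched below in Python. The `register` decorator, the `dispatch` function, and the `needs_human_review` status are assumptions made for this example, not part of any CeAF specification.

```python
from typing import Callable, Dict

Processor = Callable[[bytes], dict]
_REGISTRY: Dict[str, Processor] = {}


def register(modality: str) -> Callable[[Processor], Processor]:
    """Decorator that plugs in a processor without touching existing ones."""
    def decorator(func: Processor) -> Processor:
        _REGISTRY[modality] = func
        return func
    return decorator


@register("text")
def process_text(data: bytes) -> dict:
    """One module per input type; this one handles plain text."""
    text = data.decode("utf-8").strip()
    if not text:
        raise ValueError("empty text input")
    return {"modality": "text", "content": text}


def dispatch(modality: str, data: bytes) -> dict:
    """Route to the matching module; flag anything unhandled for a human."""
    processor = _REGISTRY.get(modality)
    if processor is None:
        return {"modality": modality, "status": "needs_human_review",
                "reason": "unsupported modality"}
    try:
        result = processor(data)
    except ValueError as exc:  # also covers UnicodeDecodeError
        return {"modality": modality, "status": "needs_human_review",
                "reason": str(exc)}
    return {**result, "status": "ok"}


if __name__ == "__main__":
    print(dispatch("text", b"Why is my internet slow?"))
    print(dispatch("video", b"..."))
```

Adding a new input type then means writing one more function with `@register(...)` on top; the existing modules and `dispatch` stay unchanged.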

Wrapping Up

The Pre-Processor Layer isn’t just another technical component—it’s the intelligent gateway that makes modern customer interactions seamless and efficient. By transforming diverse multimodal inputs into structured insights enriched with context, it empowers enterprises to deliver faster, smarter, and more personalized experiences.

In next week’s article, we’ll dive deeper into how the Orchestrator, the “brain” behind MOP, uses these processed inputs to drive intelligent decision-making across your organization.